@liane, Unsplash.com

“We do not see things as they are, we see them as we are.” – Source

What is trust? According to Merriam-Webster, it is the assured reliance on the character, ability, strength, or truth of someone or something. Yet go on Facebook, YouTube, Twitter, or pretty much any social network other than TikTok on any given day, and you’ll see truth debated and facts disregarded among your own friends and family. Conspiracy theories, disinformation, #fakenews, bots, paid advertising, extreme left- and right-wing media, massive personal data breaches, and even our country’s leaders are relentlessly pummeling us from all sides, pushing us to question our truth. Add to that the threat of artificial intelligence (AI) and synthetic deepfake videos, and our world is headed toward an existential crisis that we, as a society, won’t see coming. Trust has become a precious commodity.

Trust is a critical pillar in any relationship, in every transaction and action, in every capacity of our lives. Without trust, there is no assurance. And yet right now, we seem to be at a flashpoint where people believe in their truth versus your truth, eroding or preventing trust in even the most basic of interactions. This is not normal. At the same time, it is the new normal.

According to Edelman’s annual “Trust Barometer” report, a staggering 73 percent of people worry about false information or fake news being used as a weapon. And they should be worried. What’s more, according to a new report on artificial intelligence (AI) and work published by Oracle, 64 percent of people would trust a robot more than their manager, and half have turned to a robot instead of their manager for advice.

In Truth We Trust?

In 2016, Cambridge Analytica took possession of extremely personal, ill-gotten data sets from Facebook that allowed the company to develop deep psychographic targeting of advertisements. These incredibly detailed data sets were intentionally (and effectively) used to stoke fear, anger and division among American citizens heading into the presidential election. An investigation by Britain’s Channel 4 caught Cambridge Analytica executives boasting about their ability to extort politicians by sending women to entrap them, and about spreading propaganda to help clients. In part, they appeared to admit that preying on the public’s fears was profitable.

You’d think that all of this would have forced a reset on the importance of trust. No. Instead our own belief systems and cognitive biases activated personal defense mechanisms to convince us that nothing was amiss in our ability to perceive truth and filter out disinformation.

The concept of “our truth” is now the source of the polarization that’s dividing society at every level and every turn. Trust is no longer a foundational currency in this model; attention, Likes, and validation are. Take, for instance, Trump supporter Mark Lee, who declared on CNN in 2017, “If Jesus Christ gets down off the cross and told me Trump is with Russia, I would tell him, ‘Hold on a second. I need to check with the president if it’s true.’” This is an extreme example, but it’s more common than we may think.

In the fall of 2019, a bipartisan Senate report revealed that over half the US population in 2016 was subjected to Russian propaganda on Facebook. This is fact. It is also a fact that the leader of the free world actively discredits, because it is an inconvenient truth for his ego. In this case, his followers trust him implicitly and, as a result, do not trust the truth from others, even when it is rooted in fact.

Disinformation Disguised as Truth to Build Communities Upon False Trust

Disinformation and distraction campaigns are now an everyday occurrence run in plain sight. The intention of misdirecting people for the benefit of someone or something else has been normalized. What’s more disturbing is that everyday people are taking to popular social networks to share conspiracy theories masquerading as legitimate news or developments just for the sake of sowing discord. Trust is now tied to the perception of truth.

InfoWars host Alex Jones insisted that the tragic mass shooting at Sandy Hook Elementary School in 2012 was a hoax staged by gun control advocates. Eventually, his followers believed him and created a movement to discredit and harass grieving parents. Then there’s #Pizzagate, in which a series of viral videos claimed that Hillary Clinton and Democrats were secretly keeping children in the basement of a pizza restaurant as part of a secret sex ring. One day, a believer showed up with an assault rifle in an attempt to save the children he believed were locked in the basement. In these cases, trust served as the catalyst for action based on the opposite of truth.

The list of viral disinformation and conspiracy theories goes on and on until, one day, enough people believe it to be their truth. They then act accordingly. And that’s when it’s too late. We are at a tipping point.

Exposing Mistruths and Misplaced Trust

More than 90 percent of Americans receive their news online, and 50 percent rely on their favorite social networks for information. But history has already demonstrated that without prioritizing the removal of harmful disinformation or promoting media literacy and critical thinking among users, truth is, unfortunately, open to interpretation. Said another way, entrusting the public to make the best decisions based on the information presented to them is proving dangerous.

Senator Elizabeth Warren set out to demonstrate how easy it is to spread disinformation and why action is needed to protect truth. On October 11, 2019, Warren published a set of Facebook ads falsely claiming that “Mark Zuckerberg had endorsed Donald Trump for re-election.” The ad went on to explain how Facebook and Zuckerberg had essentially “given Donald Trump free rein to lie on his platform — and then to pay Facebook gobs of money to push out their lies to American voters.”

Case in point: in one of the many misleading Donald Trump ads promoted on Facebook, his campaign spread an already debunked conspiracy theory about former Vice President Joseph R. Biden Jr. and Ukraine: “Learn the truth. Watch Now!” Mr. Biden’s campaign demanded that Facebook take down the ad because of its blatant lies, but the network chose to let it stand. Notably, Facebook had removed ads from Warren’s campaign in March 2019 that called for the breakup of Facebook.

Shareholders Profit from Mistruths and Propaganda

If the truth is so important, then why aren’t technology and government leaders taking action to protect users and citizens on commercial platforms?

Free speech. Profits. Agendas. The reality is that algorithms favor emotionally charged content because it sparks engagement and delivers ROI to advertisers and shareholders. Provocation is good for business, regardless of its effects. It also creates insular networks of like-minded people who act and react to everything they perceive as their truth. It’s commercial psychological warfare, and we are its audience and guinea pigs.

Just recently, Zuckerberg defended the platform’s stance on allowing disinformation in campaign ads. He told The Washington Post ahead of a speech at Georgetown University, “People worry, and I worry deeply, too, about an erosion of truth. At the same time, I don’t think people want to live in a world where you can only say things that tech companies decide are 100 percent true. And I think that those tensions are something we have to live with.”

“In general, in a democracy, I think that people should be able to hear for themselves what politicians are saying,” Zuckerberg continued. “Often, the people who call the most for us to remove content are often the first to complain when it’s their content that falls on the wrong side of a policy.”

This too is debatable.

The Telecommunications Act of 1996, specifically Title V (also known as the Communications Decency Act), allowed internet companies to host untruthful information in the name of free speech. The caveat was that the host could not endorse it. This is arguable now because a free and open internet is not the same thing as a commercial network such as Facebook, YouTube, Twitter, Google, Reddit, et al.

The conversation is then relegated to defending the democratization of information and free speech without also exploring productive solutions and innovative possibilities for protecting the health and well-being of democracy and people. To an everyday user, what’s the difference between Facebook or YouTube and the internet?

Thoughtful deliberation and discussion are productive, healthy and necessary. But they are increasingly difficult at a time when everyone is right in their beliefs and perspectives. How can anyone hear anyone else when everyone is yelling over each other? How can anyone feel empathy when passion and angst are the fuel for engagement? How does anyone know where to focus when attention is so fragmented and constantly pulled away from important information and moments? There’s no time or space to think and deliberate deeply about important subjects. But that must change.

If I Can Be Honest with You…

Truth and reality are now open to interpretation because it is how we make them our own.

I once said that the good thing about social media is that it gave everyone a voice. The dangerous thing about it is that it gave everyone a voice. With social media comes great responsibility…and accountability.

The solutions to reset the balance are complex but attainable. We live in disruptive times and these times require disruptive new rules to change our norms and behaviors for the betterment of society (and democracy).

Trust in a digital age cannot be optional or a luxury afforded by only a few.

Let’s start with transparency and work our way to trustworthiness. One could argue that transparency assumes there is reason not to trust something or someone. It’s sort of like starting a sentence with “If I can be honest…” or “Honestly…” Honesty is the assumption. But maybe it takes articulation to remind people of intent.

Why alienate or upset users (or customers) when we could build productive, informative, like-minded communities? Perhaps transparency and truth now have to be emphasized as a point of differentiation and value.

Change the Business Model to Promote Truth and Positive and Productive Experiences

If distraction, disinformation, lies, hate and conspiracy theories are good for business, then change the business model. Our democracy, relationships, ability to access truth and our overall sanity and wellness should not be compromised in the name of commercial pursuits or profit. Turns out it’s not too much to ask. People are looking for leadership and they’re looking for it in new and unlikely places.

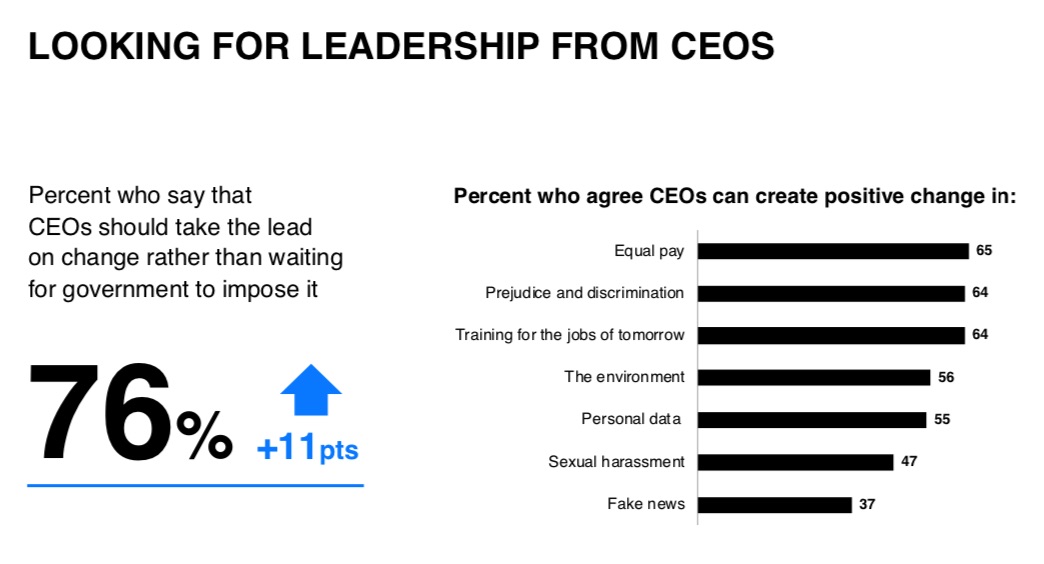

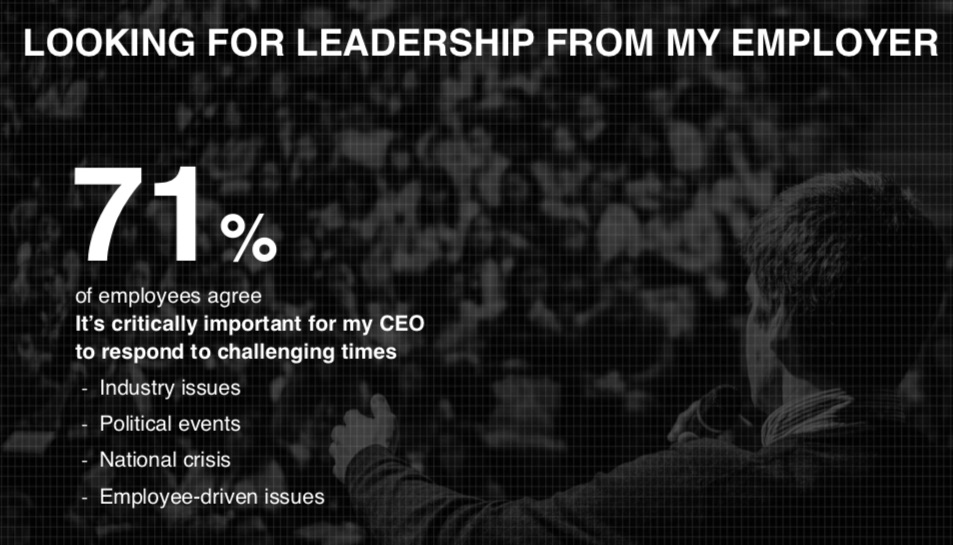

According to Edelman’s Trust Barometer, 76 percent of people say that CEOs can create positive change in a variety of important areas of work and life. This is up 11 points! Previously, trust in CEOs had been eroding year after year.

A significant majority of employees (71 percent) are also looking for leadership to respond to challenging times.

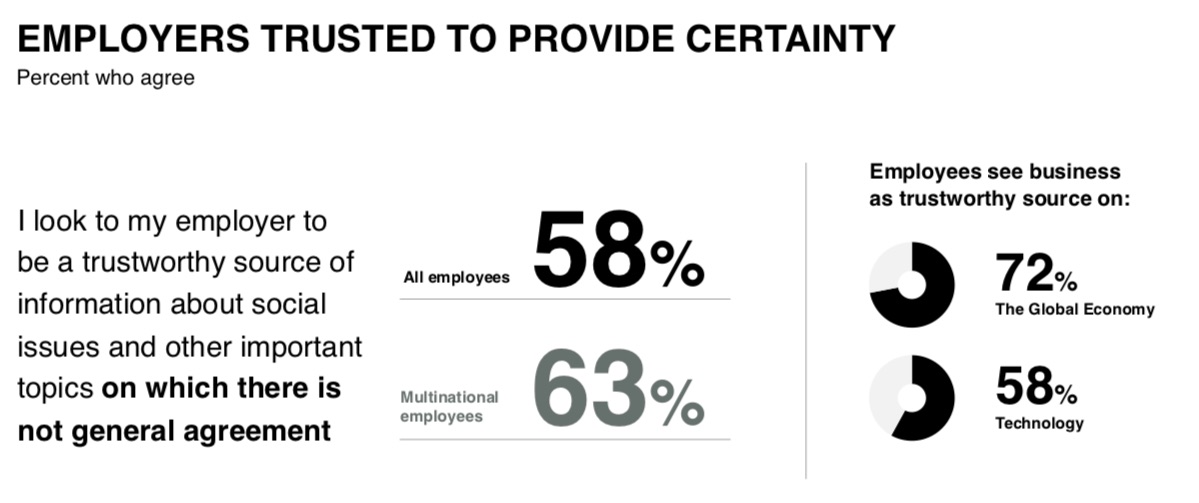

Beyond corporate social responsibility (CSR), smart brands are connecting business models to social issues and to corporate purpose and values. In fact, employers are now trusted to be a reliable source of information about social issues beyond work.

This is a time for new leadership. This is a time to earn and build trust among the people who matter most to you personally and professionally. It’s also time for new business models, and for advertisers to demand that the connection between platform, brand and user be safe and beneficial.

TikTok, for example, announced that it would not accept political advertisements of any kind. It simply doesn’t want to alienate users.

“Our primary focus is on creating an entertaining, genuine experience for our community,” wrote Blake Chandlee, TikTok’s VP of global business solutions, in a company blog post. “While we explore ways to provide value to brands, we’re intent on always staying true to why users uniquely love the TikTok platform itself: for the app’s lighthearted and irreverent feeling that makes it such a fun place to spend time.”

“Any paid ads that come into the community need to fit the standards for our platform, and the nature of paid political ads is not something we believe fits the TikTok platform experience,” Chandlee wrote. “To that end, we will not allow paid ads that promote or oppose a candidate, current leader, political party or group, or issue at the federal, state, or local level—including election-related ads, advocacy ads, or issue ads.”

Popular online image sharing community Imgur recently announced that it would no longer support Reddit’s NSFW communities because they put its “business at risk.”

In a blog post published earlier this week, the company shared that “over the years, these pages have put Imgur’s user growth, mission, and business at risk.” The post emphasized the company’s desire for “Imgur to be a fun and entertaining place that brings happiness to the Internet for many, many years to come.”

Then, on October 30, 2019, Twitter followed suit. CEO Jack Dorsey announced that Twitter would no longer sell political advertising globally. To him, it’s a moral obligation to protect civic discourse and democracy. “A political message earns reach when people decide to follow an account or retweet. Paying for reach removes that decision, forcing highly optimized and targeted political messages on people. We believe this decision should not be compromised by money,” he explained.

He also spelled out the difference between freedom of speech and freedom of reach: “This isn’t about free expression,” he said. “This is about paying for reach. And paying to increase the reach of political speech has significant ramifications that today’s democratic infrastructure may not be prepared to handle. It’s worth stepping back in order to address.”

It seems that one approach to truth in the digital age is to be the truth. Let the truth draw together a community where users, advertisers and the network mutually benefit. Trust becomes a currency.

This approach leans on tried and true human attributes where individuals (and the networks where they interact) earn social capital and the reward for trust is social reciprocity. The Organization for Economic Co-operation and Development (OECD) defines social capital as “networks together with shared norms, values and understandings that facilitate co-operation within or among groups.”

Norms are society’s unwritten rules. Values are the core of how we live our lives. Together they define how we live together. As the OECD describes, “values – such as respect for people’s safety and security – are an essential linchpin in every social group. Put together, these networks and understandings engender trust and so enable people to work together.”

Like begets like. Reciprocity is a gift we give to those who we believe give us value. Social capital is the basis for rethinking our relationship with networks, connections and information to find and surround ourselves with truth.

What Do You Stand For? #WDYSF

For leaders, this is an incredible opportunity to engage communities around your purpose and how that purpose aligns with truth. For advertisers, your brand is defined by the social capital of the networks and how they enrich or expend the value of user (aka customer) experiences and connections. Commercial networks, you are not the internet. You are not beholden to the same standards as a free and open web. Perhaps there are ways to give users access to truth, or at least an absence of disinformation, that boost trust and, ultimately, the social capital of the network and of your users.

These are just some of the ideas to get a new conversation started. If we place truth at the center of the experience, if we protect users and advertisers, the outcome creates trust as a lucrative currency. We earn attention and relationships based on the common good of the community.

In a digital age where darkness is pervasive, be the light. We have to shape and influence the world we want to see.

Brian Solis | Author, Keynote Speaker, Futurist

Brian Solis is a world-renowned digital analyst, anthropologist and futurist. He is also a sought-after keynote speaker and an 8x best-selling author. In his new book, Lifescale: How to live a more creative, productive and happy life, Brian tackles the struggles of living in a world rife with constant digital distractions. His previous books, X: The Experience When Business Meets Design and What’s the Future of Business, explore the future of customer and user experience design and modernizing customer engagement in the four moments of truth.

Invite him to speak at your next event or bring him into your organization to inspire colleagues, executives and boards of directors.

