The AI community is buzzing around an essay published by Matt Shumer, “Something big is happening.” In it, Shumer describes a moment when AI capabilities are far outpacing society. He argues that most individuals aren’t keeping up with the speed of the change, comparing this point in time to the “this seems overblown” phase before COVID closures and their impacts hit worldwide. His takeaway is that disruption “is happening right now,” even if it sounds crazy.

Shumer’s point is that we’re on the cusp of a moment when AI stops behaving like a tool you supervise step-by-step and starts behaving like a system that can take initiative, test its own work, iterate, and ship. His central claim is that the “this seems overblown” phase is ending, fast. In his view, we’ve hit an inflection point where the newest AI systems have shifted from “assistants” to semi-autonomous workers that can take a goal in plain English, build a complete product, test it, and iterate with minimal human involvement. The next step is fully autonomous AI workers.

And the essay’s most viral line is the one that should bother every executive and board member: “GPT-5.3-Codex is our first model that was instrumental in creating itself.”

Said another way, AI is building the next AI.

That sentence is a headline in and of itself.

So here’s a question: Who inside your organization is building the next version of your company?

AI is not only accelerating AI development; it is also accelerating advantage and competitive moats. It is compressing learning cycles. It is rewarding the people and companies who can translate capability into outcomes. And it is leaving everyone else with an expensive set of pilots, under-utilized AI model licenses, a stack of policies, and a growing sense of unease they cannot quite name.

That unease is starting to take shape now. I call it AI Darwinism, the survival-of-the-most-adaptive era of business. AI Darwinism is a new selection pressure in business, created when intelligence accelerates the pace of change so dramatically that advantage shifts from size and speed to learning velocity and reinvention. This is true for organizations and people alike. And it rewards organizations that evolve their operating model by combining human judgment and creativity with machine agency, redesigning work to move faster in learning, not just faster in execution.


I’ve seen this before. In the early 2000s, I studied the rise of digital Darwinism, which explored how businesses were and weren’t navigating the digital revolution. Now, it’s happening all over again, this time on a potentially exponential scale.

New York Times tech writer and Hard Fork podcast co-host Kevin Roose captured the concept of AI Darwinism in a recent post: “I [sic] follow AI adoption pretty closely, and i have never seen such a yawning inside/outside gap.” He continued, “people in SF are putting multi-agent claudeswarms in charge of their lives, consulting chatbots before every decision, wireheading to a degree only sci-fi writers dared to imagine. people elsewhere are still trying to get approval to use Copilot in Teams, if they’re using AI at all.”

This is what I’ve been warning about for three years, even when audiences wanted GenAI news, demos, and prompt tutorials instead: the disruption is not AI. The disruption is the divide it creates and the chasm it expands.

Vinod Khosla’s AI divide, translated for leaders

Vinod Khosla issued an ominous warning in 2024. “I am awe struck at the rate of progress of AI on all fronts,” he observed.

“Today’s expectations of capability a year from now will look silly and yet most businesses have no clue what is about to hit them in the next ten years when most rules of engagement will change.”

He continued, “It’s time to rethink/transform every business in the next decade.”

Vinod Khosla keeps returning to a blunt idea: AI will drive massive productivity and abundance, and it will also create violent discontinuities for jobs and incumbents. He’s explicit about both the upside and the turbulence.

Here’s the part executives should underline, stick on the wall, and use to push leadership teams to explore opportunities with urgency. Khosla describes a near-term corporate glow-up: “increasing productivity, reducing costs… increasing abundance generally.” Then he warns that the same flywheel can trigger sweeping displacement.

That’s the AI divide in one frame: those who turn capability into compounding advantage, and those who wait for permission until the market moves without them.

The data says the capability gap is already real

Shumer draws on lived experience. Research supplies the specifics.

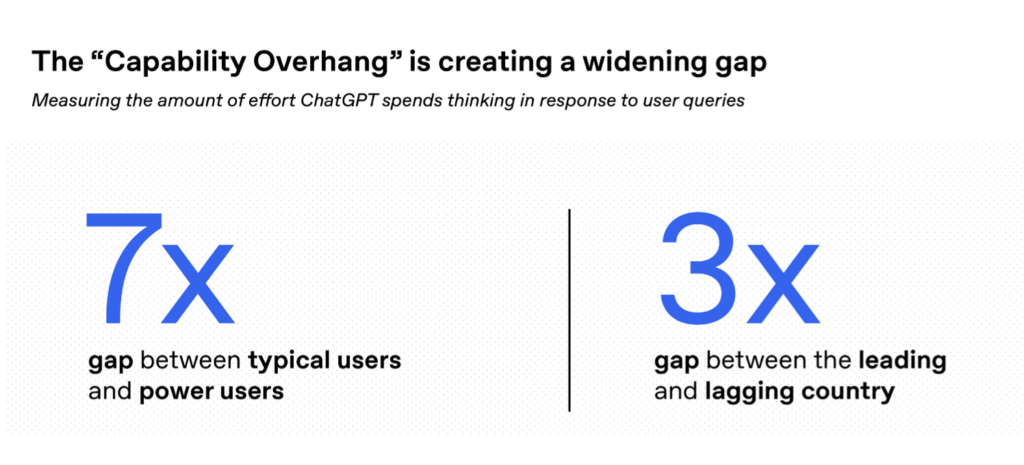

We keep talking about AI adoption as if the story is access. OpenAI’s latest research, “Ending the Capability Overhang,” argues the real story is underuse: a growing “capability overhang,” the gap between what frontier AI can do and the value most people, businesses, and countries are actually capturing at scale.

The most important takeaway from OpenAI’s research is not that models are getting smarter. We already know that. The headline is that capability is compounding faster than society and businesses are absorbing it, and that gap is becoming the new competitive divide. In OpenAI’s framing, a “capability overhang” is the widening distance between what frontier AI can do and how fully those capabilities are being adopted and integrated into real work.

In other words, the divide is not who has the most AI. It is who has the agency to apply it deeply, across real work, and to reimagine work, day after day.

The winners will be the organizations, and the people, that know how to use AI deeply, repeatedly, and responsibly. OpenAI shows this overhang is already massive, even among people with the same access.

The “typical power user” relies on about 7x more advanced “thinking capabilities” than the typical user.

That represents an AI maturity gap. It means some people are using AI for complex, multi-step work and higher-value outcomes, while others are still treating it like a search box with better manners.

Just Google “Clawdbot” to see all of the wild examples of people deploying AI agents to run their life!

Shumer’s piece describes lived experience on the inside of the curve. OpenAI is describing what happens when that curve meets the real world: capability expands, but value creation concentrates in the hands of people and organizations who know how to work with it. This is why the “AI is building the next AI” line matters. It matters because when intelligence compounds, the advantage goes to those who compound learning.

The AI gap is not a future problem. It is a present competitive condition.

What we’re missing…

Shumer is right about velocity. He’s right that public perception is lagging. He’s right that people who tried AI two years ago are evaluating a fossil record.

But the essay mostly frames the moment as a personal wake-up call about labor displacement and individual adaptation. That is important. We also need to discuss leadership: not inspirational leadership, but operational leadership.

As the research shows, the real constraint is not model capability. It is vision, AI fluency, and managerial capability.

OpenAI’s “overhang” research essentially says this out loud: adoption alone is not enough. Effective use is the differentiator.

Roose is describing the cultural version of that.

Khosla is describing the macroeconomic version of that.

AI does not replace leaders. It replaces leaders who cannot see what is happening.

If your people are “behind,” it is rarely a talent problem. It is usually a permission or empowerment problem. Leadership needs a mindshift.

This is where expert leaders matter. They can recognize the moment, tell the truth about it, and re-architect the company around it.

Using a compass to reframe the future of work and labor

Yes, AI is now building the next AI. The hopeful implication is not “we’re doomed.” The hopeful implication is that we can finally move from automation projects to reinvention programs.

If you are a CEO, CIO, COO, CHRO, CDO, or on a board, the possibility is not “AI makes employees faster.” It is:

- Faster cycle time from insight to decision to action

- Better service quality at scale

- More resilient operations

- New products that were previously uneconomic

- A workforce that spends less time on repetition and more time on learning, scaling, judgment, creativity, relationship-building, and domain imagination

But possibility does not deploy itself.

What business and technology leaders need to do now

You want your teams to feel they’re growing, not losing ground to AI. Give them a leadership upgrade, not just the tools.

Name the shift inside your company

If you want your teams to feel like they’re growing, not losing ground to AI, do not hand them a tool and call it transformation. Start a movement. This moment is not about adopting AI; it is about ending the capability overhang inside your company. It is about closing the gap between what is now possible and what your people are empowered to deliver. It is about turning fear into fluency, confusion into cadence, and experimentation into an operating model.

This is the leadership work. This is the bridge.

The Overhang Movement is a leadership pledge.

1) Declare the shift, out loud, in plain language.

Most organizations are stuck because nobody has named what is happening. So, name it.

We are moving from AI that answers to AI that acts, from copilots to agents, from productivity hacks to workflow reinvention, from “Can we use it?” to “What outcomes can we now deliver that we could not deliver before?”

If you cannot name the shift, and people can’t articulate it, you cannot lead it.

2) Make AI fluency a leadership standard, not an employee experiment

This is where movements win or die.

If executives outsource understanding, the organization will confuse activity with progress. Leaders must be the first to practice what they expect: not performative prompting, but real use. Real workflows. Real decisions.

Set a new expectation. Every leader must run one meaningful workflow with AI weekly and share what they learned. Make curiosity measurable. Make learning contagious.

The future will not be led by the most confident leaders. It will be led by the most committed learners.

3) Redesign work around outcomes, not tasks

Tools speed up tasks while movements redesign reality.

Pick one journey your customers can feel, one process your employees resent, one area where delay costs real money and trust. Then rebuild it end to end with humans and machines in the loop.

Do not optimize the old. Replace the old.

AI ROI is not saved minutes. It is saved months, which then allow for new value exploration and creation.

4) Turn governance into an accelerator

Give people permission with boundaries. Create the policy envelope, the audit trail, the evaluation discipline, the accountability. Make governance operational and empowering.

5) Build a learning loop that compounds

Movements run on rhythm. Create a weekly cadence that replaces status updates with learning reviews. What worked. What broke. What was shipped. What we learned. What we will change.

Then scale by reuse. Lift the pattern, not just the tool. Reuse agents, reuse guardrails, reuse telemetry.

If you do not build a learning loop, you are not transforming.

6) Make the divide visible, then make it unacceptable

The overhang thrives in silence, so measure depth and outcomes:

- How many workflows are being redesigned end to end?

- How many teams are using AI for multi-step work, not single answers?

- How fast do insights become decisions, and decisions become actions?

- Where are people blocked by fear, policy ambiguity, or lack of training?

What you do not measure becomes your blind spot. What you normalize becomes your culture. You’re building a culture of learning and unlearning, and the safety and permission to ask questions and explore.

7) Lead with hope, backed by competence

People are afraid of being left behind or replaced by AI. Your job is to replace anxiety with agency. Show them the path. Train them. Equip them. Protect them. Celebrate progress. Make reinvention something people join, not something that happens to them.

Hope is more than a feeling. Hope is a plan people can follow.

AI Darwinism is here, but so is your choice

Kevin Roose is right to call out the “inside/outside gap” because it’s now visible, cultural, and compounding.

Shumer is living the agency shift inside an AI-native org and sees the divide from the front lines.

Vinod Khosla is right to hold two truths at once. AI can unleash productivity and abundance, and it can also widen inequality and displace work at a pace leaders are not prepared to manage.

And OpenAI is right to quantify the capability overhang, the widening distance between what these systems can do and what most people and organizations are actually doing with them.

AI Darwinism is not about the strongest companies winning. It’s about the most adaptive.

In nature, survival does not go to the biggest or the most experienced. It goes to the ones that learn faster, evolve sooner, and change their behavior before the environment forces them to. That is the AI era in one sentence.

AI is rewriting the conditions of competition in real time.

This is where “AI is building the next AI” becomes a leadership mirror.

If intelligence is now compounding itself, then the pace of disruption will not be linear. It will be exponential.

The question is whether your organization will be better because you learned how to build with AI as it evolves, govern it, and redesign work around it.

Here’s the leadership pledge I want you to take into your next executive meeting:

- We will not treat AI as a tool. We will treat it as a new operating model.

- We will not run pilots forever. We will redesign workflows end-to-end.

- We will not ban curiosity. We will build safe systems for it.

- We will not measure AI by output volume. We will measure it by outcomes and learning velocity.

- We will not let the AI divide become a talent crisis. We will lead the transition.

In the age of AI Darwinism, your greatest competitive advantage is the organization you become with AI.

The future won’t reward the companies that adopt AI.

It will reward the ones that adapt because of it.

And this is where the hopeful story lives.

The opportunity for leaders is to turn adaptation into hope at scale.

Mind the gap. Close the gap.

Read Mindshift | Book Brian as a Speaker | Subscribe to Brian’s Newsletter

