What do real workers want from AI? And how does that differ from what AI experts think?

Let’s explore an insightful study recently published by Stanford University’s Social and Language Technologies Lab, “Future of Work with AI Agents.”

Drawing on over 2,100 tasks across 104 occupations, the research compares how workers and AI experts think about automation (what AI should do on its own) and augmentation (where AI should collaborate with humans).

Two groups were surveyed:

- 1,500 domain workers — the people doing the jobs.

- 52 AI experts — those building the tools.

The results are grounded in O*NET, the U.S. Department of Labor’s job database, and offer a rare task-level view into where humans want to stay involved, and why.

The key takeaway? Workers don’t just want to keep their jobs; they want AI to work with them, not instead of them, especially in areas involving communication, judgment, and human connection. But don’t stop here. There’s so.much.more.

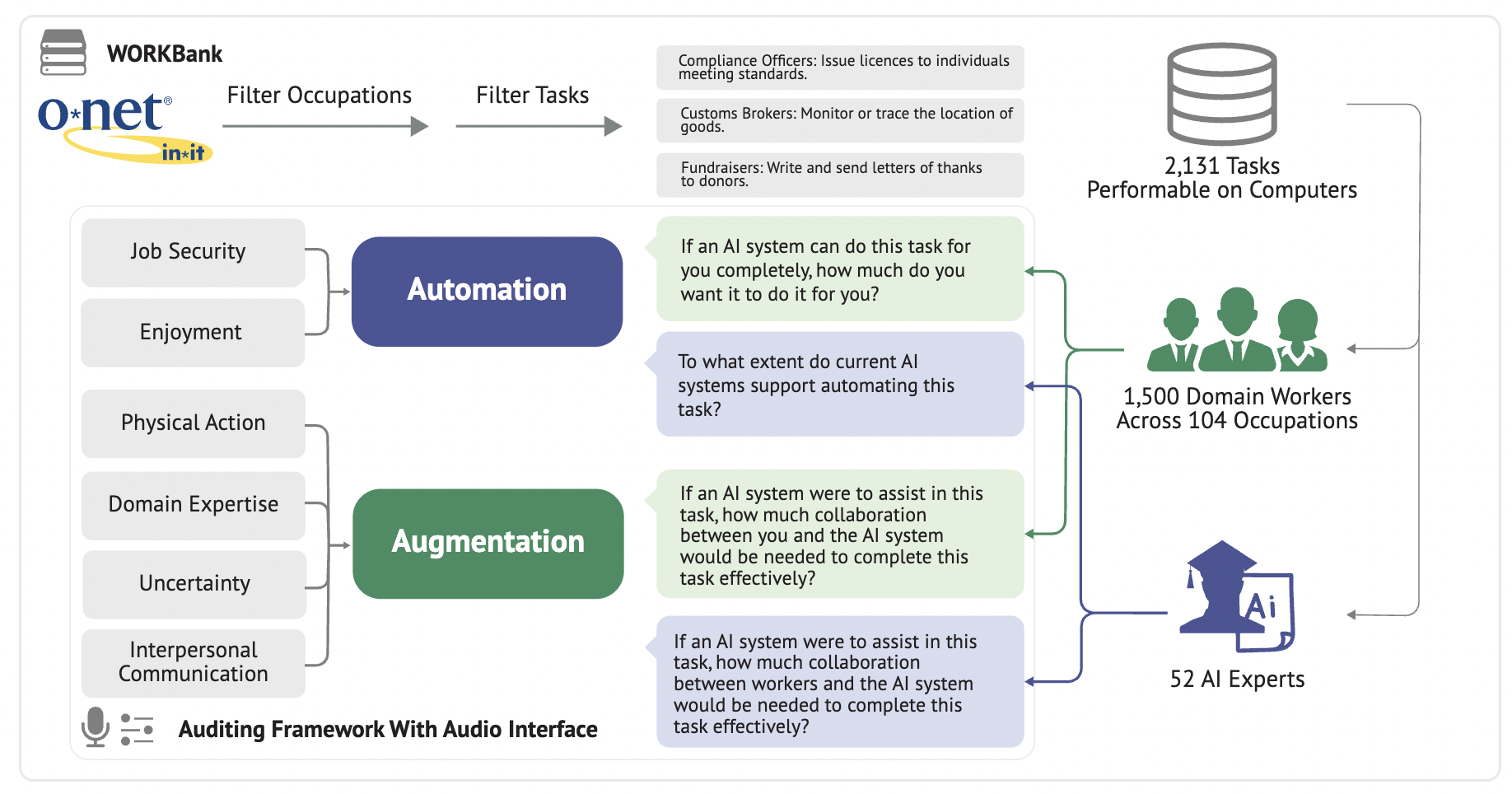

This first visual is a snapshot from a large-scale research effort aimed at answering a critical question:

How do workers and AI experts differ in their views on which tasks should be automated — and which should be augmented by AI?

The study analyzes over 2,100 tasks across 104 occupations, using task-level data to explore two key dimensions:

- How much human involvement do workers want in their day-to-day tasks?

- How much AI involvement do experts believe is technically feasible?

Two key perspectives were measured:

- Automation (blue): Tasks that AI could perform entirely on its own — and how workers feel about that possibility.

- Augmentation (green): Tasks where AI could assist humans — and the ideal level of collaboration between the two.

The researchers surveyed two distinct groups:

- 1,500 domain workers — people currently performing these tasks in the real world

- 52 AI experts — technical professionals evaluating AI’s capabilities

Each group was asked:

- For automation: Can AI do this task alone? Should it?

- For augmentation: If AI helps, what’s the right balance between human and machine input?

The findings reveal several drivers behind workers’ preferences:

- Concerns about job security, along with enjoyment of the work itself, tend to make workers resist full automation.

- Tasks requiring expertise, judgment, or interpersonal interaction lean heavily toward augmentation.

- Physical tasks fall somewhere in between, depending on the nature of the work.

In short, this research highlights the nuanced ways people think about AI — not just as a tool, but as a collaborator, and sometimes, as a competitor.

Over 70 million U.S. workers are on the brink of the biggest workplace transition in decades! And it’s being driven by AI agents.

As technology advances, worker perspectives are too often left out of the conversation.

So, what do workers actually want AI to automate, and where do they prefer human-AI collaboration? Oh, and how do those preferences stack up against what the technology can realistically do?

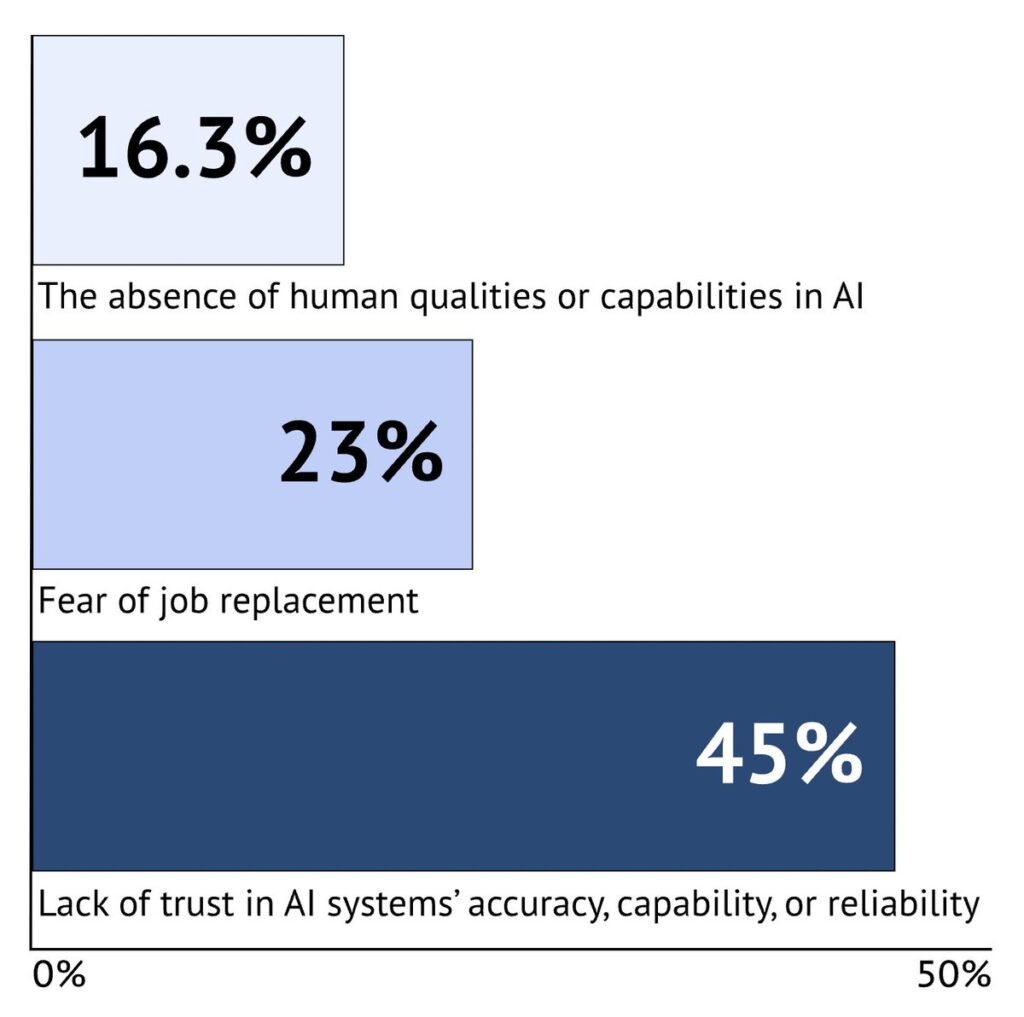

As part of the study, workers shared their top concerns about AI’s role in their jobs:

- 45% said they don’t trust AI to handle their tasks responsibly

- 23% fear being replaced entirely

- 16% worry about the loss of the human touch in their work

These aren’t just statistics; they’re usable signals. They signal that any future of work built with AI needs to be designed for people, not just for performance, stakeholders, or shareholders.

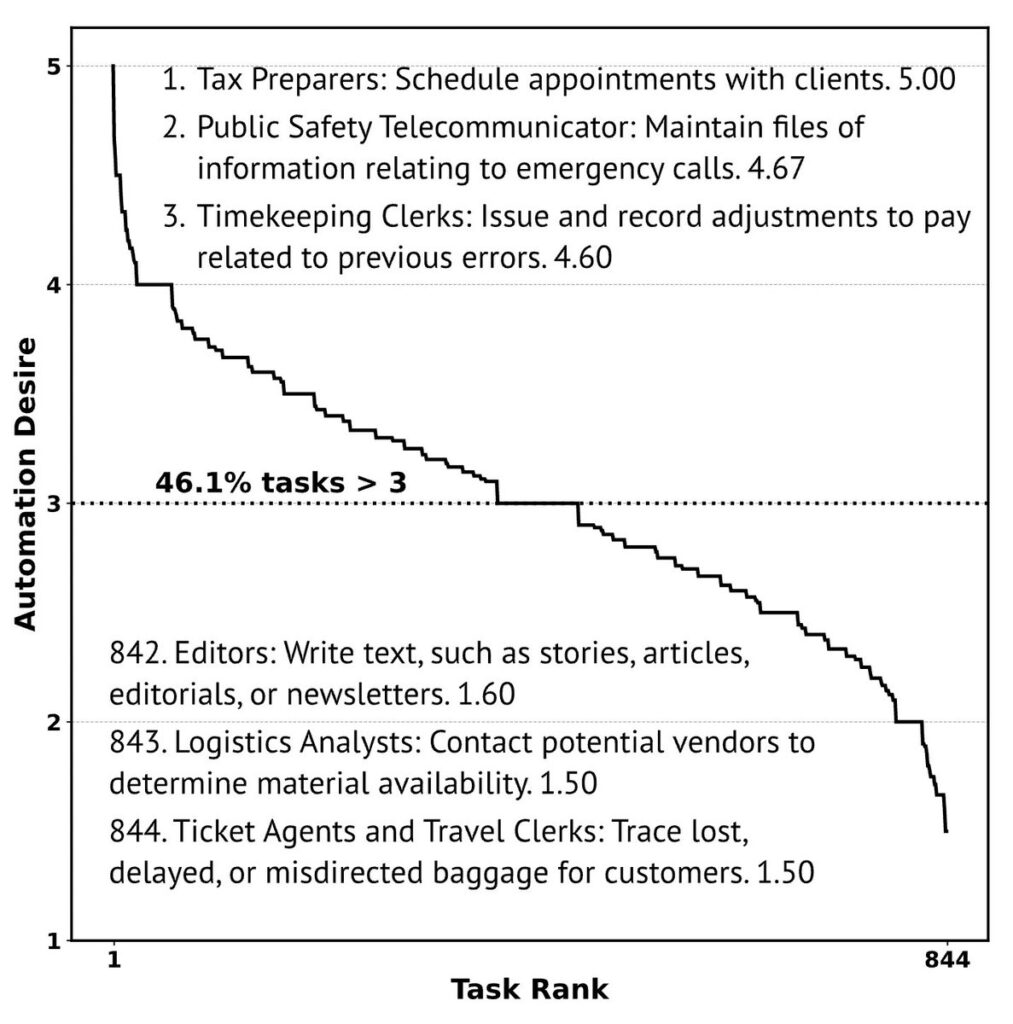

Despite widespread concerns, nearly half of all workers are open to AI automation — under the right conditions.

In the study, 46.1% of tasks were rated positively for full AI automation by the workers who actually perform them. In other words, for nearly half of the tasks, workers said they would welcome AI doing the task entirely…even after considering risks like job loss and reduced enjoyment.

This signals a key nuance: workers aren’t simply resistant to AI; they’re open to it when it offloads routine, repetitive, or less meaningful work. As the popular argument goes, this frees them to focus on what matters more.

The challenge isn’t just what AI can do. It’s what people are willing to let go of…and why. So, so, so important and under-explored!

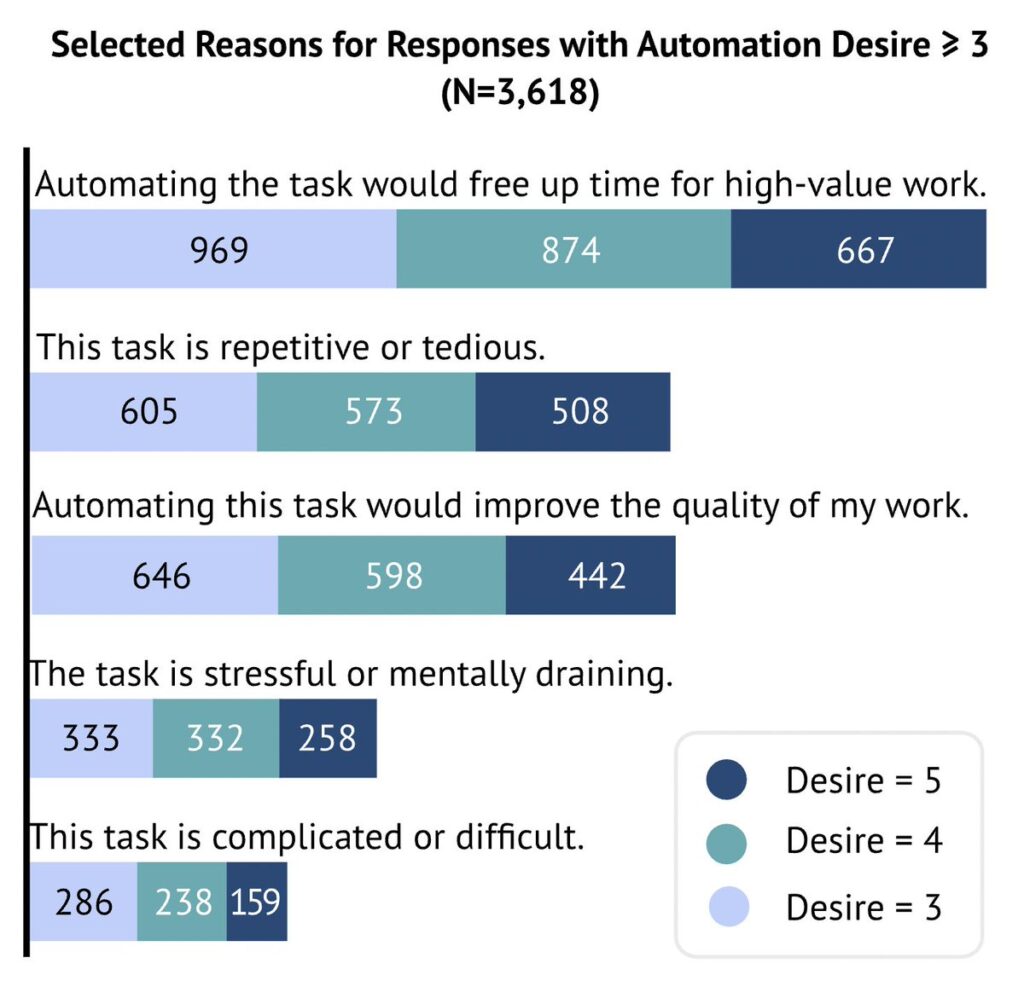

Why do workers want AI to automate parts of their jobs?

When workers express a desire for automation, it’s not because they want to do less — it’s because they want to do better.

Among the 3,618 task responses where workers rated their automation desire at 3 or higher (on a 5-point scale), the most common motivations were:

- 69.4% — Freeing up time for high-value work

- 46.6% — The task is repetitive or tedious

- 46.6% — Automation could improve work quality

- 25.5% — The task is stressful or mentally draining

While some also cited tasks being complicated or difficult, the bigger theme was clear: Workers are most open to automation when it relieves low-value, exhausting, or repetitive work, giving them more time and mental space (yes please) for the parts of their job that matter most.

In other words, automation isn’t just about efficiency. It’s about making room for meaning.
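The four percentages above add up to more than 100% (69.4 + 46.6 + 46.6 + 25.5 = 188.1), which implies that a single response could cite more than one reason. As a rough illustration, here is a minimal Python sketch, using made-up responses and hypothetical reason labels, of how per-reason shares behave when each share is divided by the total number of responses.

```python
from collections import Counter

# Made-up example: each response is the set of reasons one worker selected.
# The labels are hypothetical, not the study's actual answer codes.
responses = [
    {"free_time", "repetitive"},
    {"free_time", "quality"},
    {"free_time"},
    {"repetitive", "quality", "stressful"},
]


def reason_shares(responses: list[set[str]]) -> dict[str, float]:
    """Return the percentage of responses citing each reason.

    Every share uses the total number of responses as its denominator, so
    when respondents may pick several reasons, the shares can sum past 100%.
    """
    counts = Counter(reason for response in responses for reason in response)
    total = len(responses)
    return {reason: round(100 * n / total, 1) for reason, n in counts.items()}


shares = reason_shares(responses)
for reason in sorted(shares):
    print(f"{reason}: {shares[reason]}%")
print(f"sum of shares: {sum(shares.values())}%")
```

With these toy responses the shares total 200%, for the same reason the study’s reported motivations total more than 100%.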

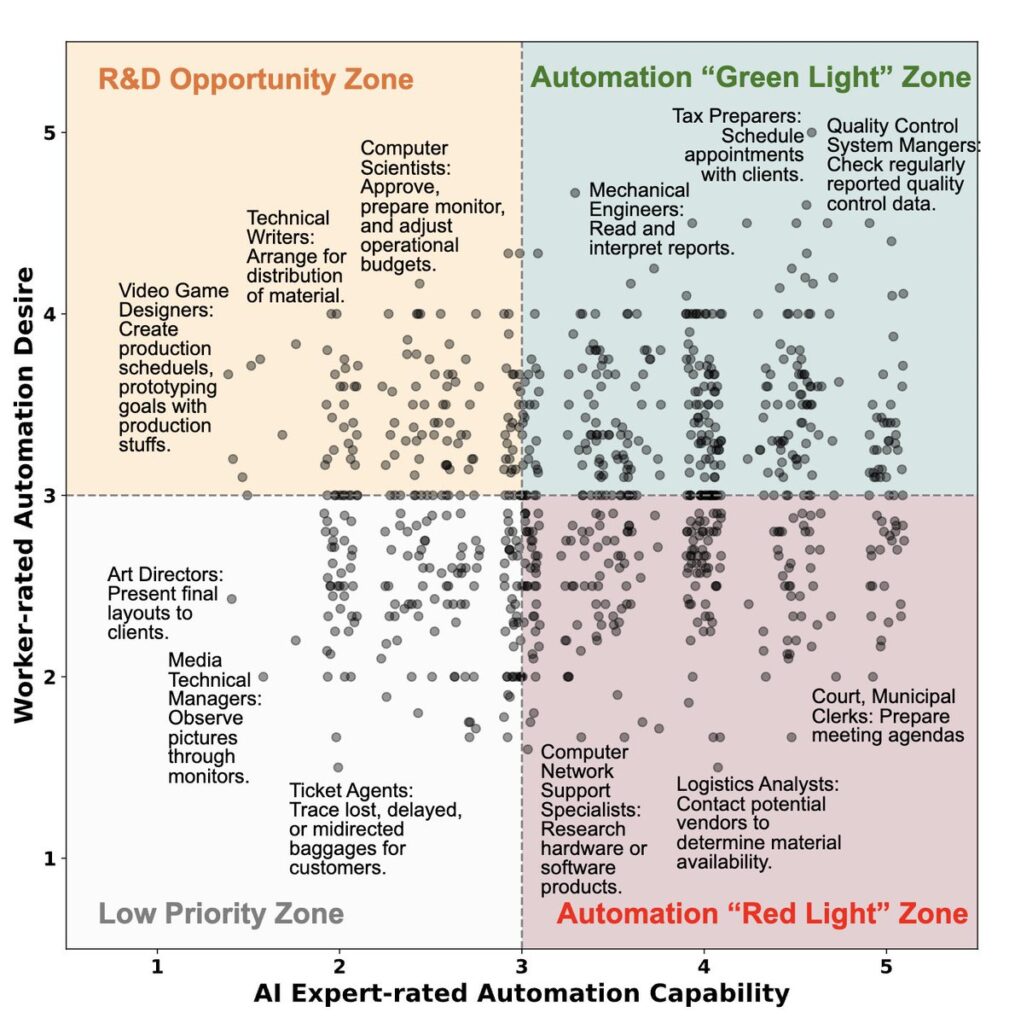

Not all tasks are created equal…AND…not all should be automated.

To help guide where AI agent investments should (and shouldn’t) go, Stanford researchers identified four distinct “task zones” based on two key factors:

- Worker desire for automation

- AI expert assessment of technical capability

These zones provide a framework to align technological potential with human values:

1. Automation “Green Light” Zone = High worker desire, high AI capability.

These tasks are ideal candidates for automation. They promise strong productivity gains and broad worker support, making them low-friction wins for AI deployment.

2. Automation “Red Light” Zone = Low worker desire, high AI capability.

Technically, AI can handle these tasks, but workers don’t want it to. Automating here risks resistance, lower morale, or broader social backlash. Caution is advised.

3. R&D Opportunity Zone = High worker desire, low AI capability.

Workers want help here, but AI isn’t ready yet. These tasks point to valuable frontiers for research and innovation, where investment could deliver strong future payoff.

4. Low Priority Zone = Low worker desire, low AI capability.

These tasks are unlikely to benefit from automation in the near term, and workers aren’t asking for it. It’s probably best to deprioritize for now.
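The four zones amount to a two-by-two grid. The Python sketch below shows the idea under stated assumptions: it treats both ratings as averages on a 1 to 5 scale and uses a hypothetical cut-off of 3.0 to separate “low” from “high.” The study’s actual scoring and thresholds may differ, and the example tasks and scores are invented.

```python
from dataclasses import dataclass

# Assumption: both ratings are mean scores on a 1-5 scale, and 3.0 is a
# hypothetical cut-off between "low" and "high", not the study's own value.
THRESHOLD = 3.0


@dataclass
class TaskRating:
    task: str
    worker_desire: float      # how much workers want the task automated
    expert_capability: float  # how capable AI experts judge current AI to be


def classify_zone(rating: TaskRating, threshold: float = THRESHOLD) -> str:
    """Place a task in one of the four zones described above."""
    high_desire = rating.worker_desire >= threshold
    high_capability = rating.expert_capability >= threshold
    if high_desire and high_capability:
        return "Automation Green Light"
    if high_capability:  # low desire, high capability
        return "Automation Red Light"
    if high_desire:  # high desire, low capability
        return "R&D Opportunity"
    return "Low Priority"  # low desire, low capability


# Invented example tasks and scores, for illustration only.
tasks = [
    TaskRating("Reformat weekly status reports", 4.3, 4.1),
    TaskRating("Deliver difficult news to a client", 1.8, 3.6),
    TaskRating("Reconcile messy vendor records", 4.0, 2.4),
    TaskRating("Mentor a new team member", 1.5, 1.9),
]

for t in tasks:
    print(f"{t.task}: {classify_zone(t)}")
```

Keeping the cut-off as a parameter makes it easy to see how sensitive the zone assignments are to where the line between “low” and “high” is drawn.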

Automation vs. Augmentation: Not everything should be fully handed off to AI

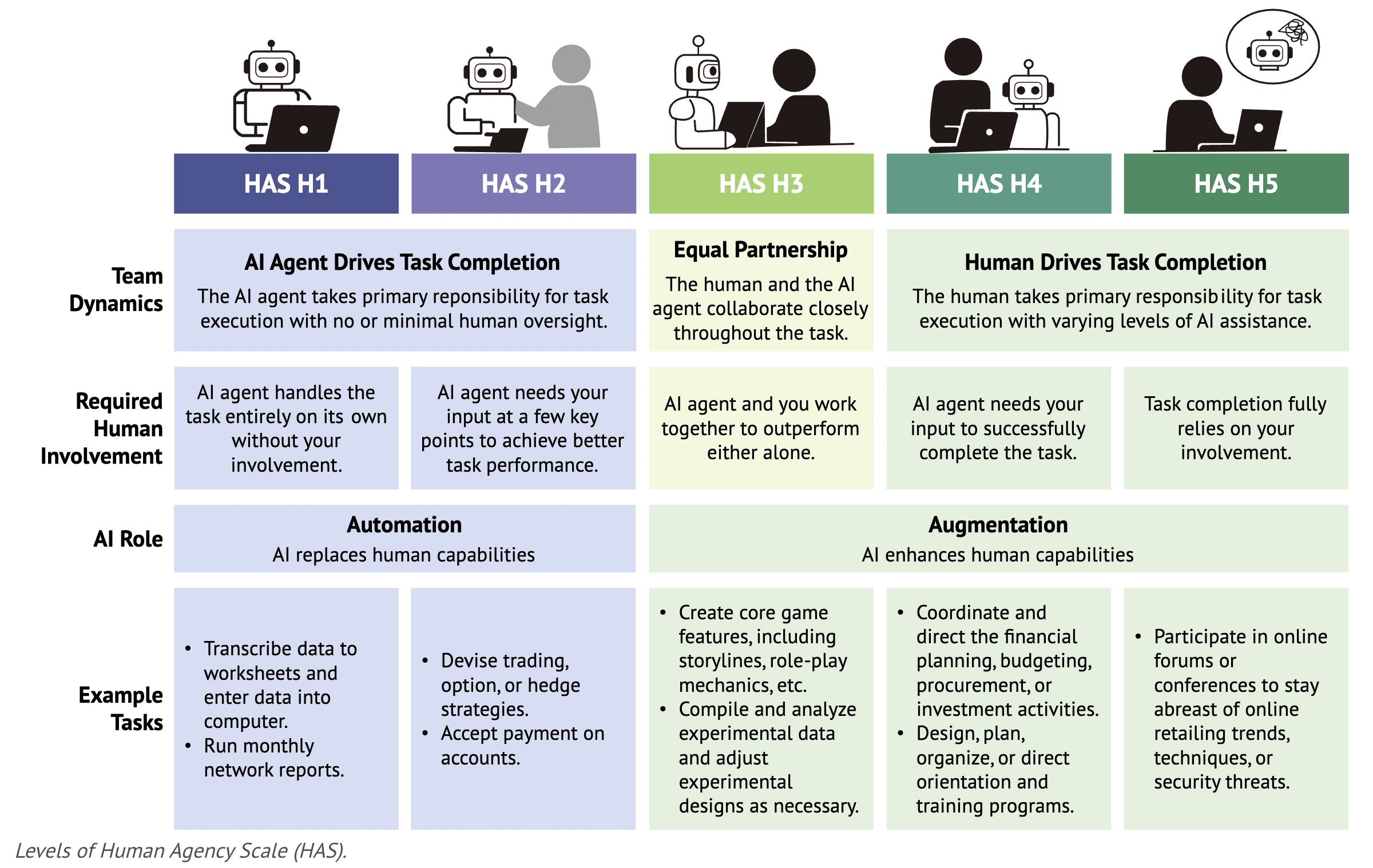

While much of the conversation around AI focuses on automation, Stanford’s research also explored the other side of the equation: augmentation. This is where AI supports, rather than replaces, human effort.

To analyze this, the team introduced a new framework: the Human Agency Scale (HAS).

The HAS is a five-level scale that measures how essential human involvement is for a task to be done well — from H1 (fully autonomous) to H5 (human-led and AI-assisted…if at all).

The HAS centers on human value, judgment, and the need for involvement, even in cases where automation is technically possible.

This shift from an “AI-first” model to a “human-centered” one offers a more nuanced way to decide not just what can be automated, but what should be augmented…and why.
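Because the HAS levels are ordered, a statement such as “workers want more human involvement than experts think is needed” reduces to comparing two levels. Here is a minimal Python sketch of that idea, using the level descriptions given in this article; the class and function names are hypothetical, not part of the study.

```python
from enum import IntEnum


class HumanAgency(IntEnum):
    """Human Agency Scale levels, ordered from least to most human involvement.

    Descriptions paraphrase this article; the class itself is illustrative.
    """
    H1 = 1  # AI handles the task fully autonomously
    H2 = 2  # AI support with human oversight at key points
    H3 = 3  # equal partnership between human and AI
    H4 = 4  # human-led, with AI assisting most of the time
    H5 = 5  # human-led; AI assists, if at all


def agency_gap(worker: HumanAgency, expert: HumanAgency) -> int:
    """Positive when workers want more human involvement than experts deem necessary."""
    return worker - expert


print(agency_gap(HumanAgency.H4, HumanAgency.H2))  # 2: workers want more involvement
print(agency_gap(HumanAgency.H2, HumanAgency.H2))  # 0: aligned
```

Using an integer-backed enum keeps the ordering explicit, so gaps can be summed or averaged across many tasks.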

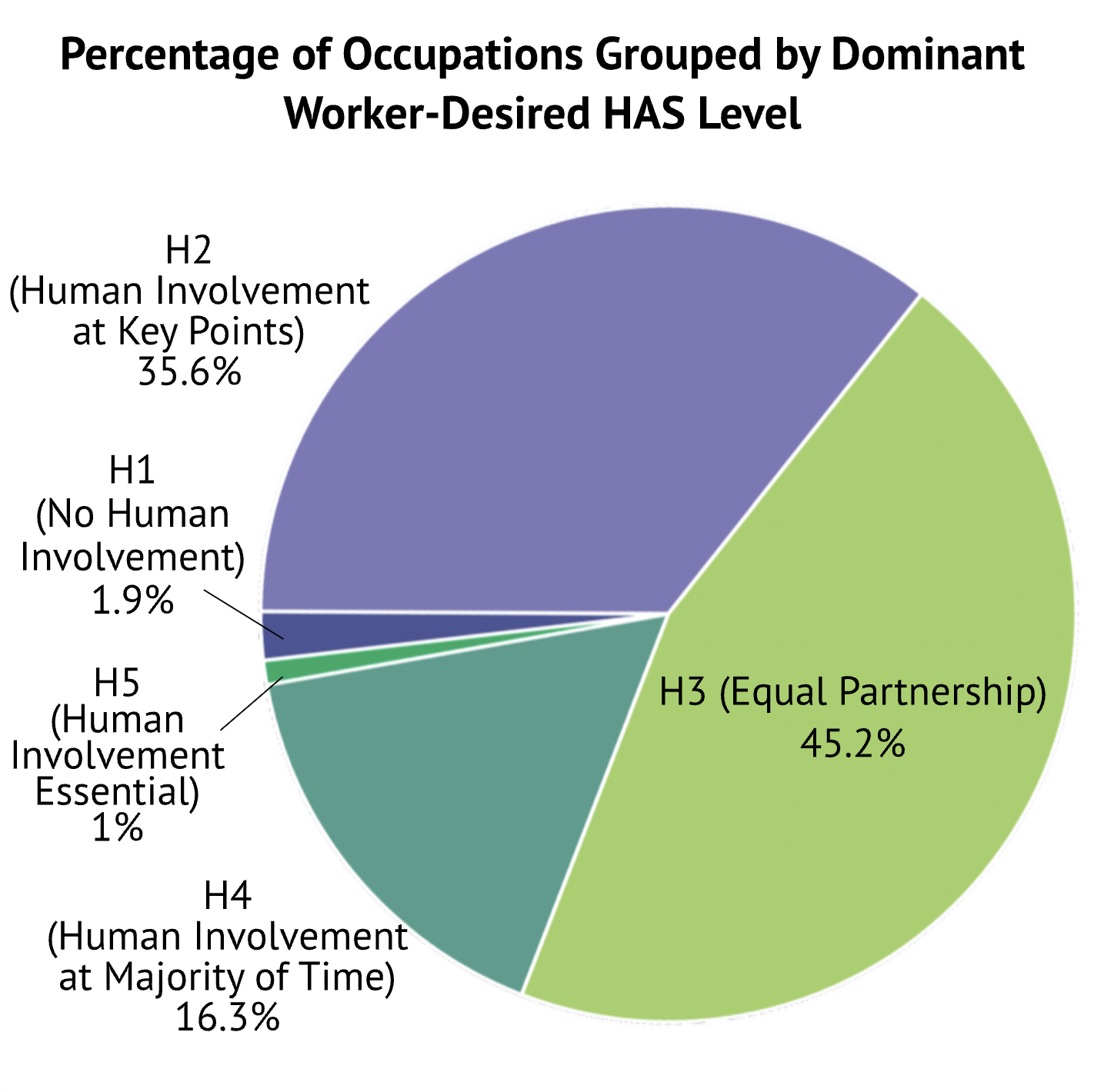

Most workers don’t want AI to take over; they want a partner.

Stanford’s research shows that across many occupations, workers aren’t looking for full automation. I mean, who is!? Instead, they prefer a collaborative relationship with AI, one that balances machine support with human judgment.

On the Human Agency Scale, this balanced view is captured by H3: Equal Partnership, where humans and AI share responsibility for completing a task.

H3 emerged as the dominant preference in 45.2% of occupations (47 out of 104) — making it the most common worker-desired level overall.

Other preferences were:

- H2 (AI support with human oversight at key points): 35.6%

- H4 (Human-led, AI assists most of the time): 16.3%

- H1 (Full automation): 1.9%

- H5 (Human-only, no AI): 1.0%
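As a quick arithmetic check, 47 of 104 occupations is 45.2%. The text gives only the H3 count; the other counts in the Python sketch below are back-calculated from the reported percentages and should be treated as an assumption. They sum to 104 and reproduce each reported figure when rounded to one decimal place.

```python
# Occupations (out of 104) whose dominant worker-desired HAS level is each value.
# Only H3 = 47 is stated in the text; the other counts are back-calculated from
# the reported percentages (an assumption, not published figures).
dominant_level_counts = {"H3": 47, "H2": 37, "H4": 17, "H1": 2, "H5": 1}

total = sum(dominant_level_counts.values())
shares = {
    level: round(100 * count / total, 1)
    for level, count in dominant_level_counts.items()
}

print(f"total occupations: {total}")
for level, pct in shares.items():
    print(f"{level}: {pct}%")
```

Running it prints 104 occupations followed by 45.2%, 35.6%, 16.3%, 1.9%, and 1.0%, in the same order as the list above.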

The takeaway? Even as AI grows more capable, workers want to stay meaningfully involved. They’re not asking to be replaced. As if that were a thing! They’re asking for shared control, trust, and agency.

Workers want more control than AI experts think is necessary

As AI capabilities advance, a growing gap is emerging, not in what’s possible, but in what people actually want.

Stanford’s analysis shows that in 47.5% of the 844 tasks studied, workers prefer to retain more human involvement than AI experts believe is technically necessary. In other words, workers lean toward higher levels of human agency even when AI could take on more of the task.

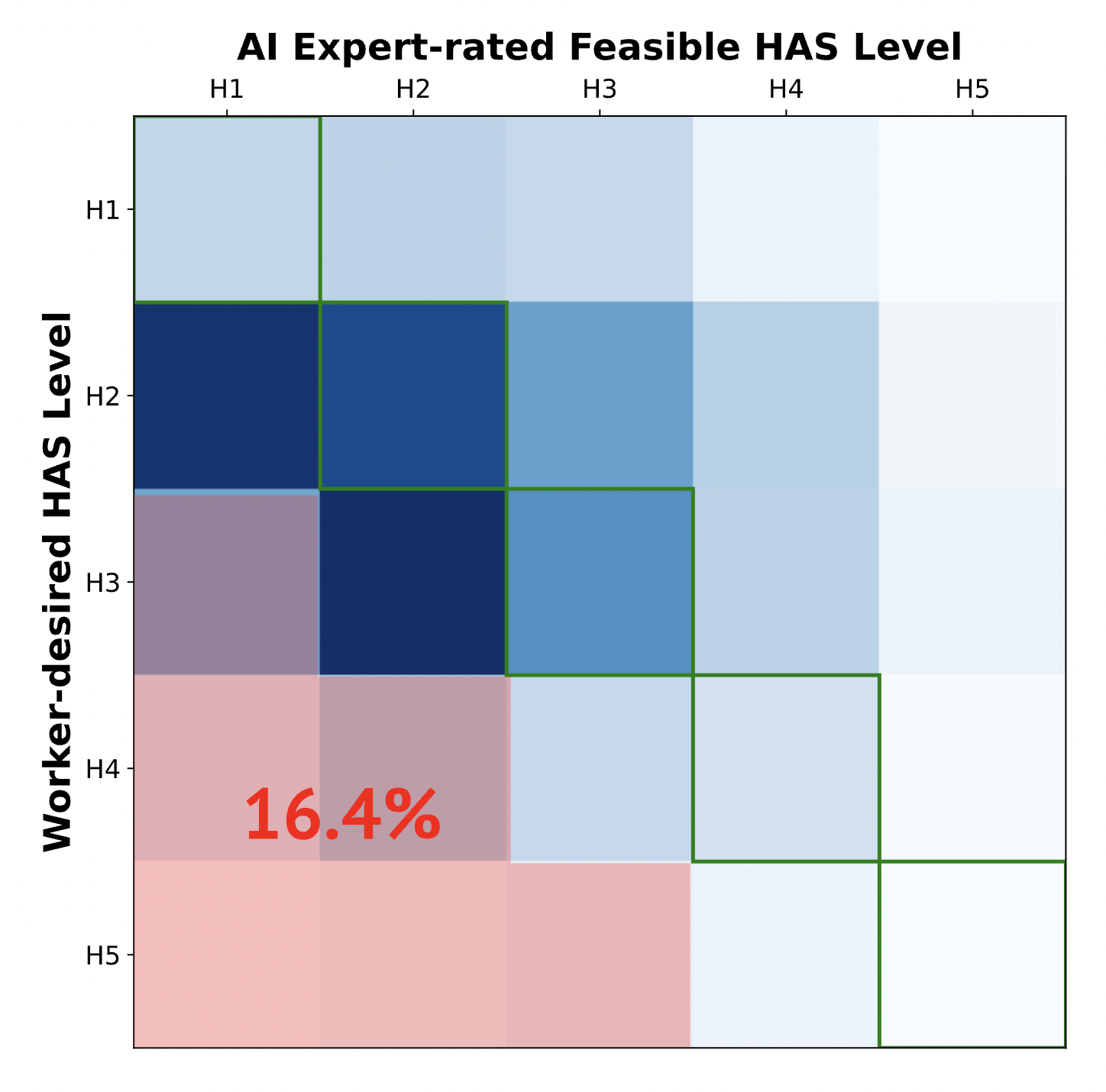

This misalignment is clearly visible in the heat map above.

- Cells below the diagonal (like H4 worker preference vs. H2 expert feasibility) show tasks where workers want more involvement than AI experts recommend.

- That red-shaded area (16.4%) highlights just one such region. This is a clear signal of emerging tension.
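The share of tasks where workers want more human agency than experts deem necessary is a simple count over paired ratings. The Python sketch below uses invented (worker, expert) HAS pairs, one per task, to build the cell counts a heat map would display and the share of tasks on each side of the diagonal. Which side counts as “below” depends on how a chart’s axes are oriented, so the sketch compares levels directly instead.

```python
from collections import Counter

# Invented (worker-desired, expert-assessed) HAS levels, one pair per task,
# using 1 = H1 (fully autonomous) through 5 = H5 (most human involvement).
pairs = [(3, 2), (4, 2), (2, 2), (3, 3), (2, 3), (5, 3), (3, 1), (2, 2)]


def gap_summary(pairs: list[tuple[int, int]]) -> tuple[Counter, dict[str, float]]:
    """Return heat-map cell counts and the share of tasks in each gap category."""
    cells = Counter(pairs)  # (worker, expert) -> number of tasks in that cell
    n = len(pairs)
    more = sum(1 for worker, expert in pairs if worker > expert)
    less = sum(1 for worker, expert in pairs if worker < expert)
    shares = {
        "workers want more agency": more / n,
        "aligned": (n - more - less) / n,
        "workers want less agency": less / n,
    }
    return cells, shares


cells, shares = gap_summary(pairs)
print(cells[(2, 2)])  # 2 tasks where both sides chose H2
for label, share in shares.items():
    print(f"{label}: {share:.1%}")
```

On real data, the “workers want more agency” share is the kind of figure the 47.5% above summarizes, while each individual cell count corresponds to one shaded square in the heat map.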

The implication? As AI gets more powerful, it’s not just a question of what we can automate, but whether people will trust, accept, and support that automation. And that right there is all about vision and comms.

Designing AI for the workplace means building with human values at the core, not just technical efficiency. But that’s been true of every technology revolution.

Automation isn’t one-size-fits-all, even within the same job

Different tasks within a single job may require very different levels of human involvement, trust, and judgment, and should not be automated in the same way. 100%!

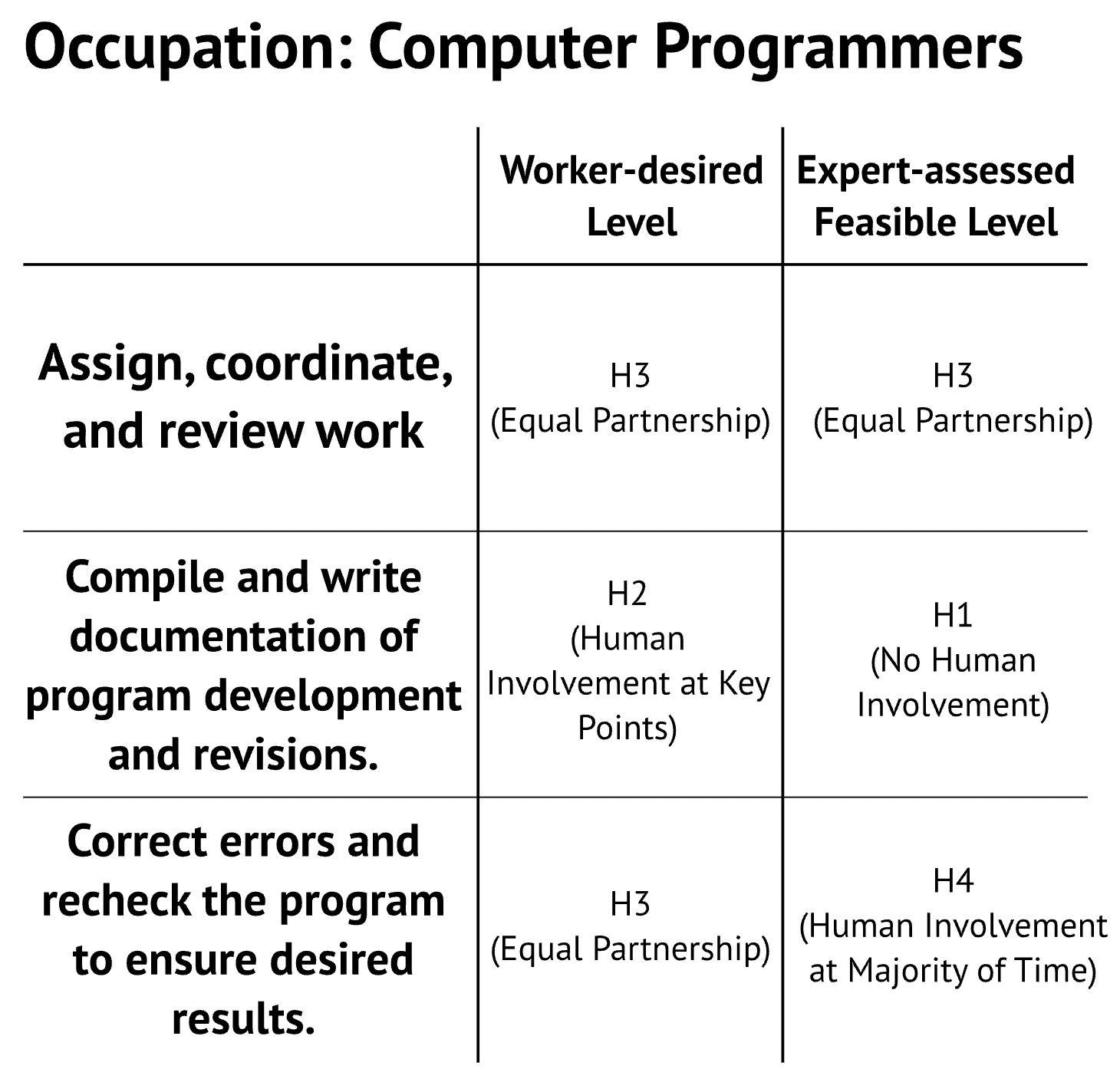

Take the role of a computer programmer: it’s highly technical, data-driven, and already deeply intertwined with digital tools. And yet, when you break it down task by task, the desired role of AI varies dramatically.

According to Stanford’s study:

- For assigning and reviewing work, both workers and experts aligned on equal partnership (H3).

- For writing documentation, AI experts saw no need for human input (H1), but workers still preferred key-point involvement (H2).

- For debugging and correcting errors, workers leaned toward equal collaboration (H3), while experts rated it at H4.

This mismatch shows that even in high-tech roles, trust and control are critical.

If AI agents are deployed in high-agency tasks without human-in-the-loop design, the risks go beyond performance:

- Users may reject or ignore the tools.

- They may override AI outputs entirely.

- They may feel disempowered, and sinking morale can erode trust and job satisfaction.

AI success depends as much on human alignment as it does on technical capability.

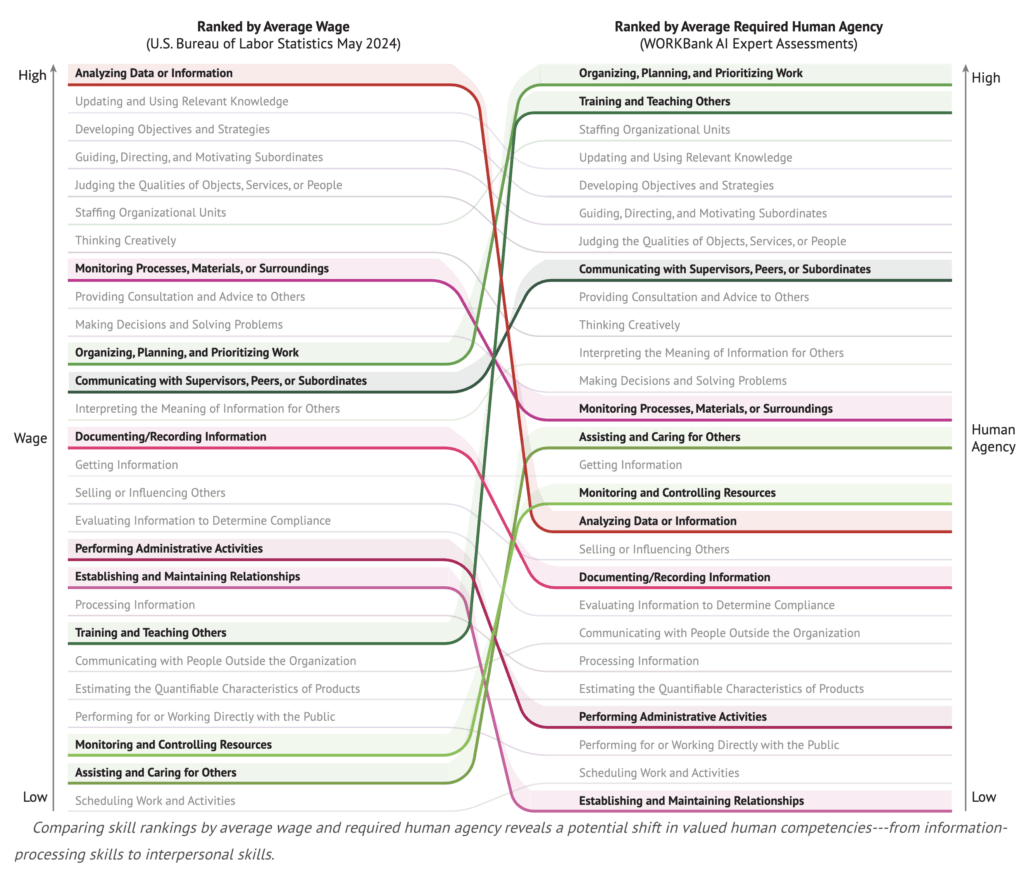

What happens when we value skills by human involvement and not just pay?

The chart above offers a powerful perspective shift: instead of ranking skills by how much they currently pay, it ranks them by how much human agency AI experts believe they require.

When we compare these two views, one economic and one human-centered, three key trends emerge:

1. Information-processing skills may decline in relative value

Skills like analyzing data, updating knowledge, and documenting information rank high by wage, but lower in required human agency. As AI becomes better at handling these tasks, their reliance on human input, and their unique value as human work, may shrink. *sigh*

2. People and coordination skills become more essential

Tasks like organizing work, training others, and communicating with peers may not always top wage charts, but they dominate in terms of human involvement. These are skills that resist full automation because they rely on emotional intelligence, situational awareness, critical thinking, and collaboration.

3. High-agency skills are broad, interpersonal, and deeply human

Tasks requiring high human agency span a wide range…from planning and teaching to decision-making and motivating others. They reflect not just what people do, but how they lead, guide, and connect with others.

As AI handles more of the technical, repetitive, or informational work, the skills that will define human value are the ones that machines can’t replicate: Judgment. Empathy. Leadership. Trust.

This is a shift in what we value in work, and in each other.

As AI advances, human skills will matter more than ever

As machines take on more routine, data-driven tasks, the skills that truly differentiate people (collaboration, leadership, communication, and nuanced judgment) will only grow in importance.

To build a future of work that works for everyone, here are three essential takeaways:

1. AI adoption must be task-aware, not job-aware.

Jobs are made up of many tasks, and not all are created equal. Some tasks within a single role may be ideal for automation, while others require thoughtful human-AI collaboration. Successful AI implementation depends on understanding this task-level nuance.

2. There’s a gap between technical feasibility and worker comfort.

Workers often want more control and involvement than AI experts think is necessary. Ignoring this mismatch could slow adoption, reduce trust, or lead to resistance. Managing that tension is critical. Anticipating it is now an option. And it all must be done with thoughtfulness, transparency, and feedback.

3. Use the Human Agency Scale to guide responsible transformation.

The HAS framework helps organizations identify which tasks should be:

- Automated,

- Augmented,

- Or left entirely human.

By anchoring AI strategy in human-centered design and empathy, companies can deploy tools and AI agents people actually want to use and work with. This will lead to better adoption, more trust, and more responsible innovation.

Remember…AI doesn’t replace people; it reshapes work, if we choose to let it. It’s a choice. Let’s make sure it reshapes work in ways that elevate what makes us human, what makes us better with AI, and what makes AI better with us!
